Ted Wang a master of none

Autonomous Robot Assisted Physical Rehabilitation

BAXTER! This is a story about the friendship between a human and a robot. I will tell the story, but first let me take a selfie…

Ok! So I first met BAXTER in the Rehabilitation Robotics Lab at the University of Pennsylvania. Many thanks to Dr. Michelle Johnson for supervising me and introducing me to this dear friend of mine. My first impression of him was that he is such a gentleman. He smiles at kids, shakes hands with strangers and listens quietly when people talk. After a brief introduction and a quick exchange of ideas, we immediately decided to collaborate on research into automating robot-human physical therapy.

Goal

BAXTER is a noble robot who decided to devote his electrical life to helping stroke survivors recover and get back to their normal lives. Being a geek, I wanted to help him reach that goal with all the technical support I could give. To be more specific, the goal is to help stroke survivors with impaired upper limbs recover through upper-limb physical rehabilitation conducted by a robot therapist (AKA BAXTER :D).

Challenges

Life is just too boring without challenges. I’m pretty sure that is what BAXTER thought too. We faced a couple of difficulties at the time.

- How does BAXTER know what the correct upper limb motion is?

- How do we detect the patient’s arm motion?

- If the motion is incorrect, how does BAXTER correct it?

Design

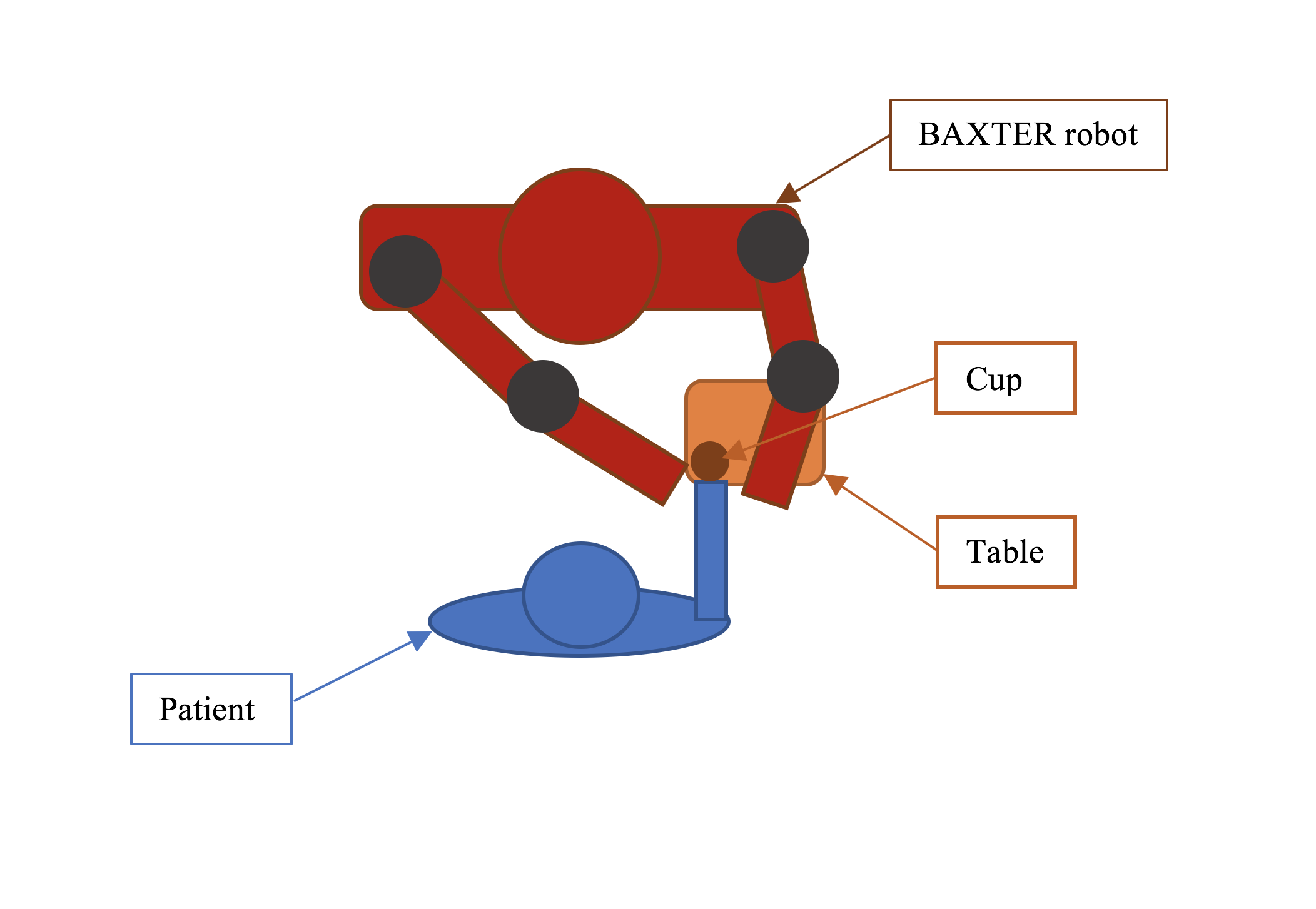

To keep things simple, we designed the session as task-oriented rehabilitation. The patient needs to perform a simple upper limb task: picking up a coffee cup from the desk. As the therapist, BAXTER’s duty is to monitor the patient’s arm motion and correct the patient when the arm leaves the correct trajectory. The setup looks like this:

Training

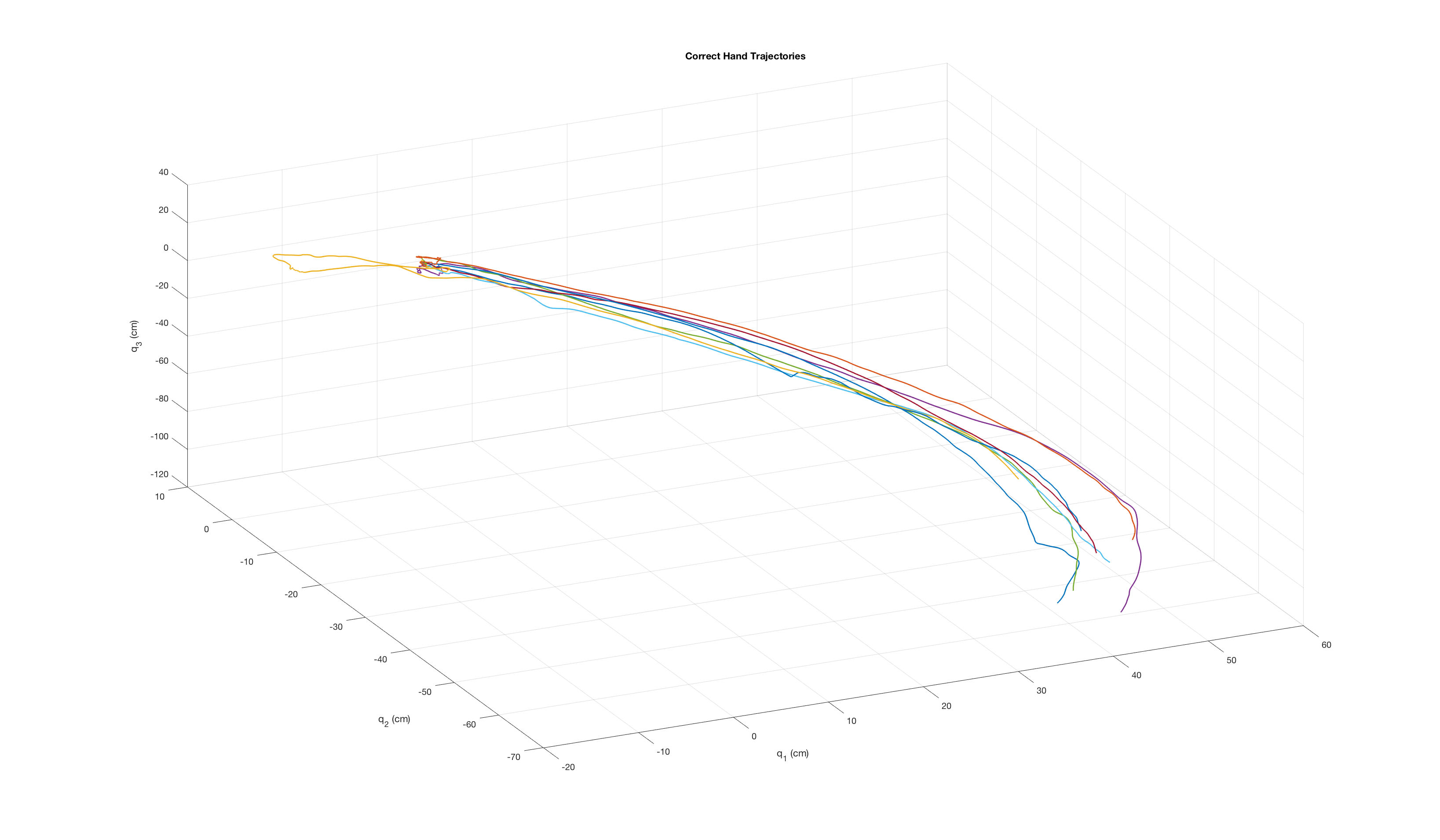

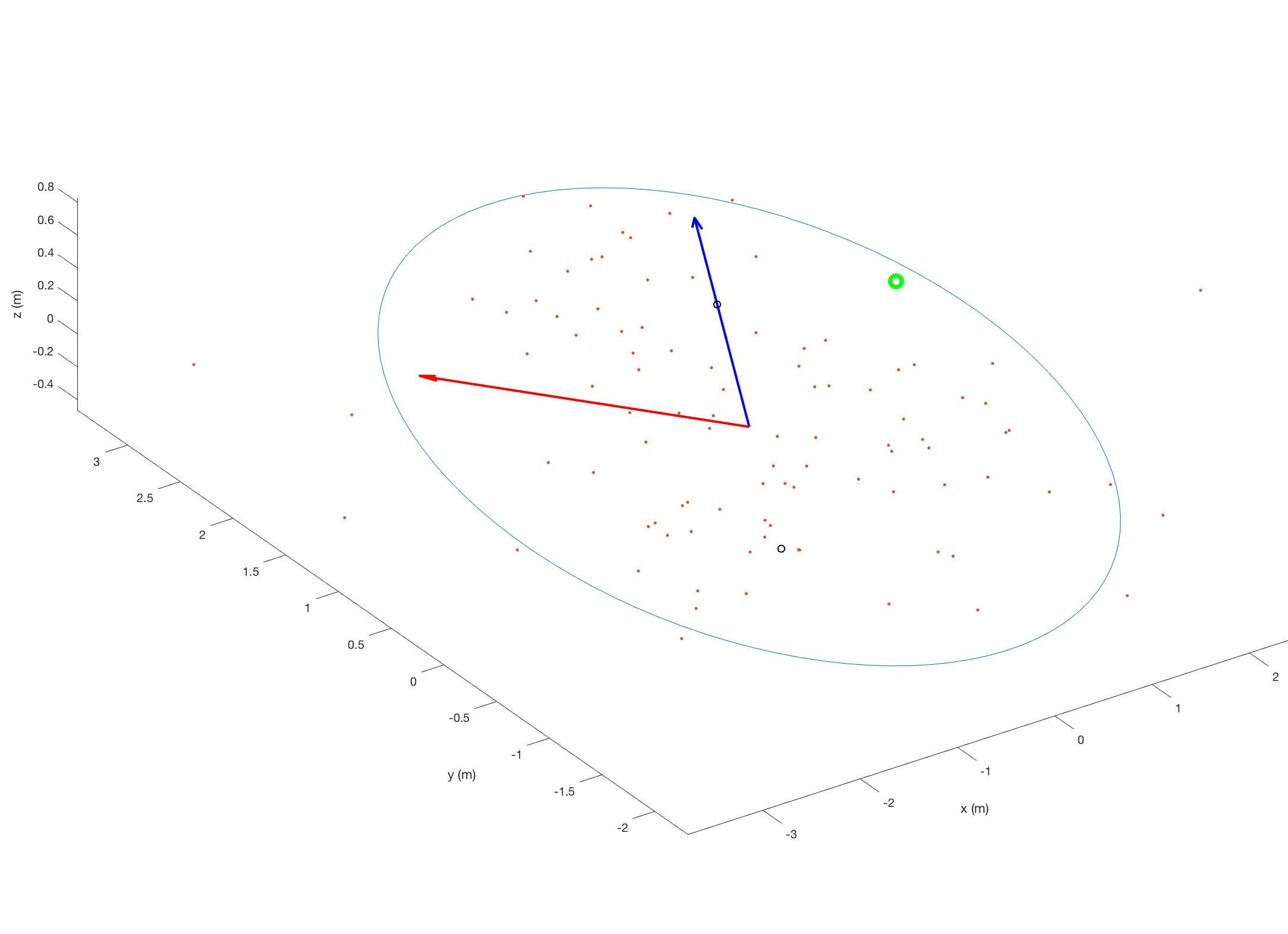

Our answer to the first question is training by demonstration. We can train BAXTER with correct motions and let him work out what the correct motion range is. Without getting too technical, these are ten trajectories of the correct motion to pick up a coffee cup from the table.

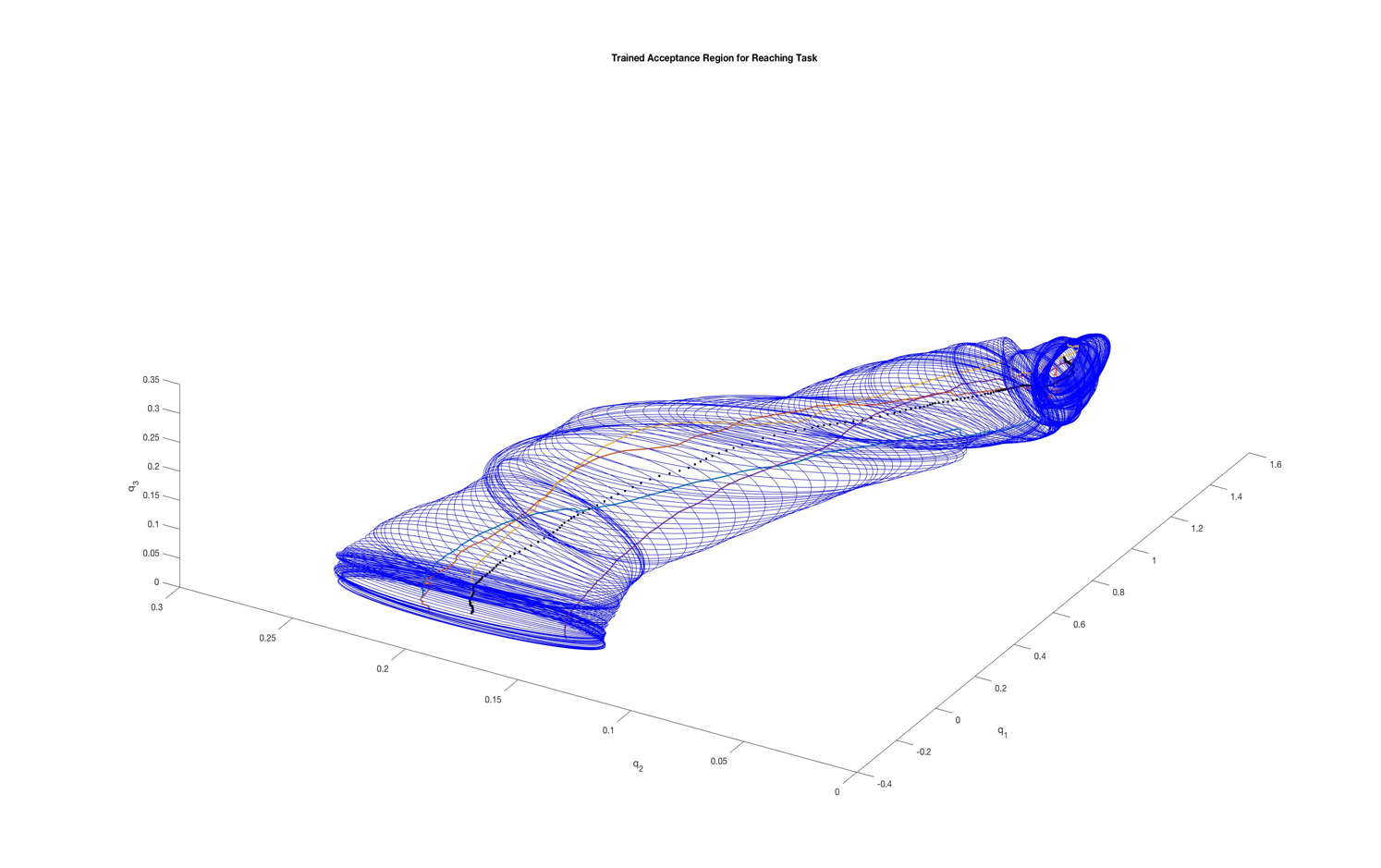

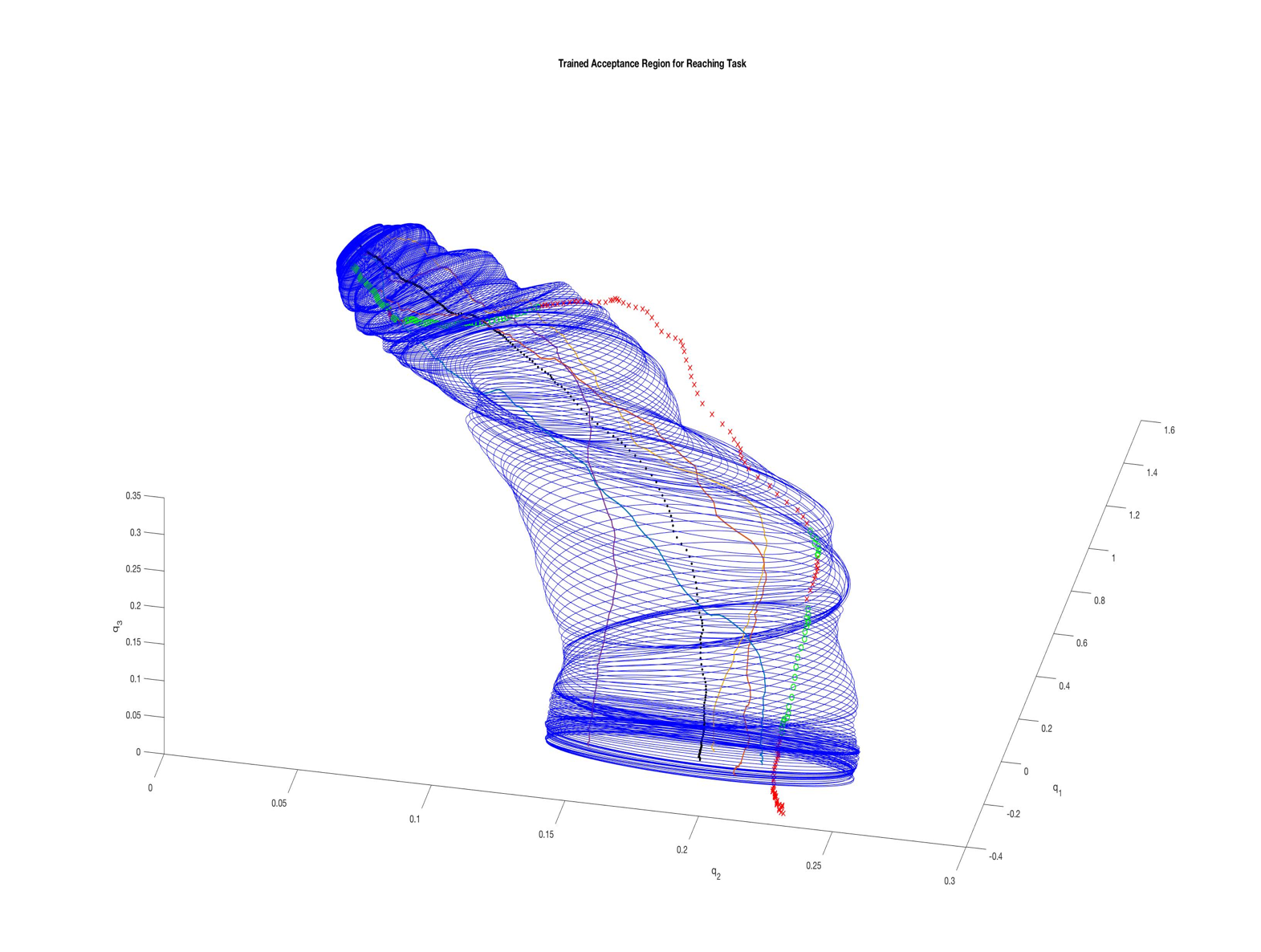

This is the motion boundary that we obtained after 10 correct demonstrations.
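For the curious, here is a minimal sketch of how a boundary could be built from a handful of demonstrations. This is not the method from our paper, just one simple way to do it, assuming each demonstration is a list of 2D points: resample every demo to the same number of steps, average them into a center path, and let the farthest demo at each step set the allowed radius. The names `resample`, `learn_tube` and the `margin` parameter are all made up for this illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def resample(traj: Sequence[Point], n: int) -> List[Point]:
    """Linearly resample a trajectory to n points, evenly spaced by index."""
    if len(traj) < 2 or n < 2:
        raise ValueError("need at least 2 input points and n >= 2")
    last = len(traj) - 1
    out = []
    for i in range(n):
        t = i * last / (n - 1)          # fractional index into traj
        lo = min(int(t), last - 1)      # left neighbour, clamped at the end
        frac = t - lo
        (x0, y0), (x1, y1) = traj[lo], traj[lo + 1]
        out.append((x0 + frac * (x1 - x0), y0 + frac * (y1 - y0)))
    return out


def learn_tube(demos: Sequence[Sequence[Point]], n: int = 50,
               margin: float = 0.0) -> List[Tuple[float, float, float]]:
    """Return one (center_x, center_y, radius) per step.

    Assumption for this sketch: the boundary is a tube around the mean
    demonstration, as wide as the farthest demo at that step plus a margin.
    """
    if not demos:
        raise ValueError("need at least one demonstration")
    resampled = [resample(d, n) for d in demos]
    tube = []
    for step in zip(*resampled):        # same step across all demos
        cx = sum(p[0] for p in step) / len(step)
        cy = sum(p[1] for p in step) / len(step)
        r = max(math.hypot(p[0] - cx, p[1] - cy) for p in step) + margin
        tube.append((cx, cy, r))
    return tube
```

A small `margin` makes the tube a bit more forgiving than the raw demonstrations, which is probably what you want with only ten of them.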

Detection

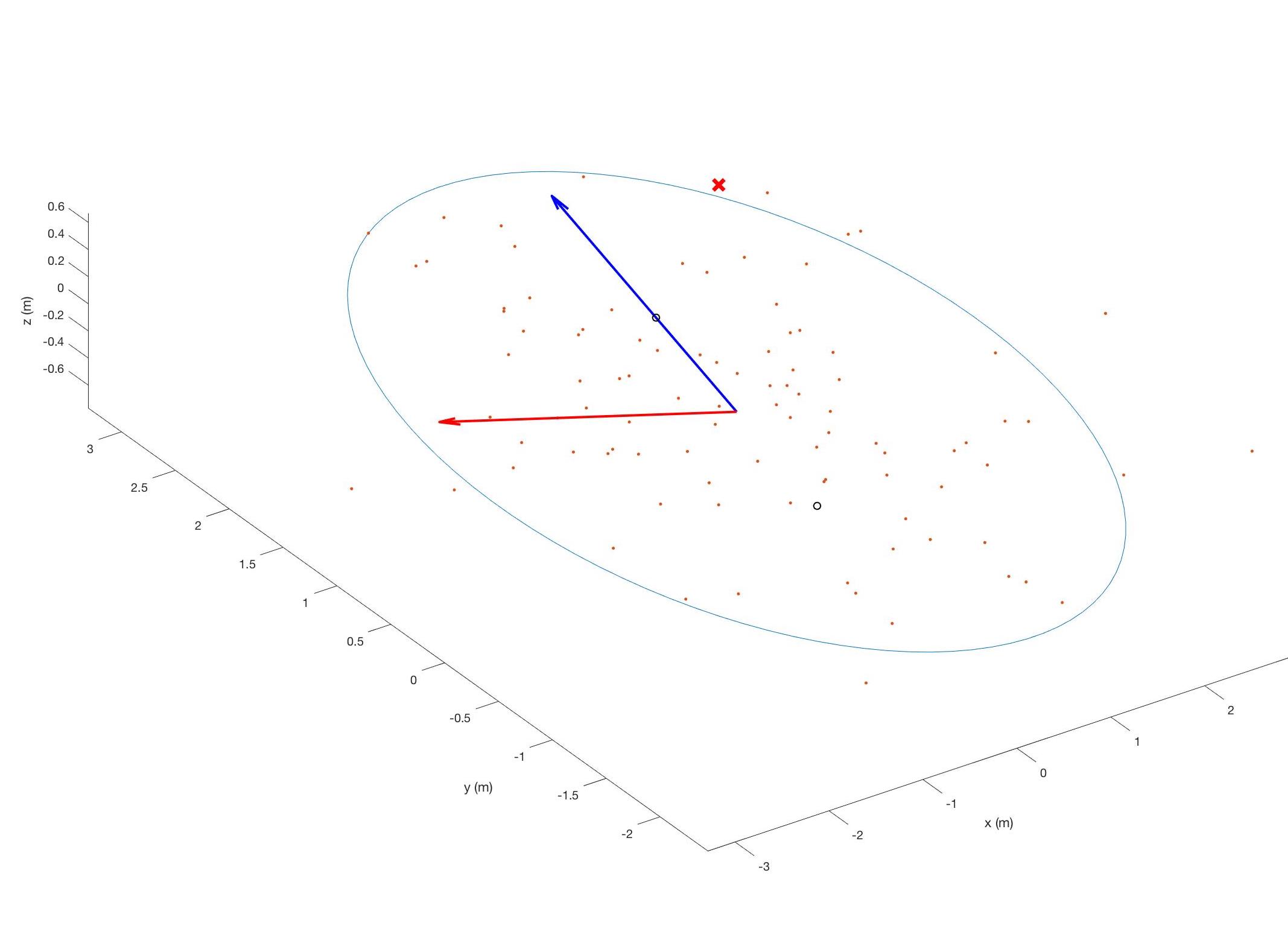

How do we detect the patient’s motion and pipe it to BAXTER? Well, we decided to use an IMU (inertial measurement unit) to track the patient’s arm motion. Once we have the patient’s hand position in real time, we cut the 3D space with a plane and project the hand position onto that plane. Now we just need to check whether the projected hand position is inside the accepted motion range. Pretty simple…
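The projection and the inside/outside check can be sketched in a few lines. This is only an illustration under my own assumptions: the plane is given by an origin and two orthonormal in-plane axes `u` and `v`, and the accepted range is a list of circles `(cx, cy, r)` on that plane. The names `project_to_plane` and `is_inside` are hypothetical, not taken from our ROS code.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Circle = Tuple[float, float, float]  # (center_x, center_y, radius)


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def project_to_plane(p: Vec3, origin: Vec3, u: Vec3, v: Vec3) -> Tuple[float, float]:
    """Express 3D point p in the 2D coordinates of the plane (origin, u, v).

    u and v are assumed to be orthonormal vectors lying in the plane.
    """
    d = tuple(pi - oi for pi, oi in zip(p, origin))
    return (dot(d, u), dot(d, v))


def is_inside(hand_2d: Tuple[float, float], region: Sequence[Circle]) -> bool:
    """True if the projected hand position falls within any accepted circle."""
    x, y = hand_2d
    return any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in region)


# Example: a vertical plane spanned by the x and z axes.
origin, u, v = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
region = [(0.3, 0.2, 0.05)]
good = project_to_plane((0.3, 0.5, 0.2), origin, u, v)
bad = project_to_plane((0.3, 0.5, 0.4), origin, u, v)
print(is_inside(good, region), is_inside(bad, region))
```

Note that the projection simply drops the out-of-plane component, so how far the hand is from the plane does not affect the check.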

This is a correct hand position (green circle):

This is an incorrect hand position (red cross):

Algorithm

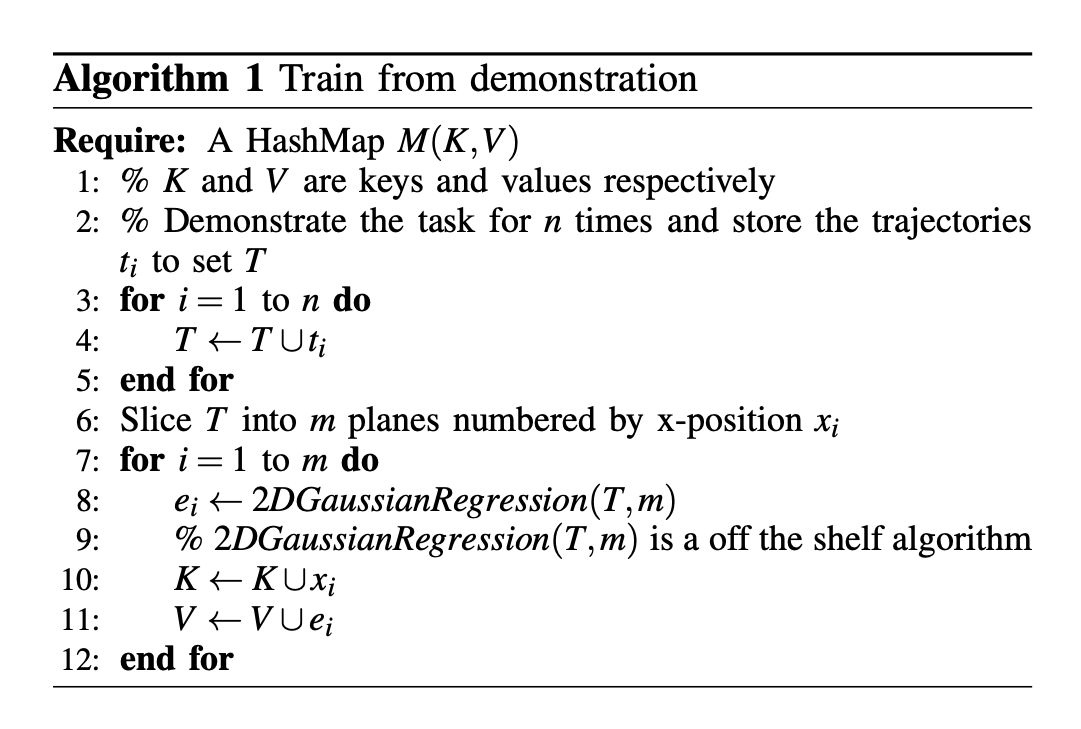

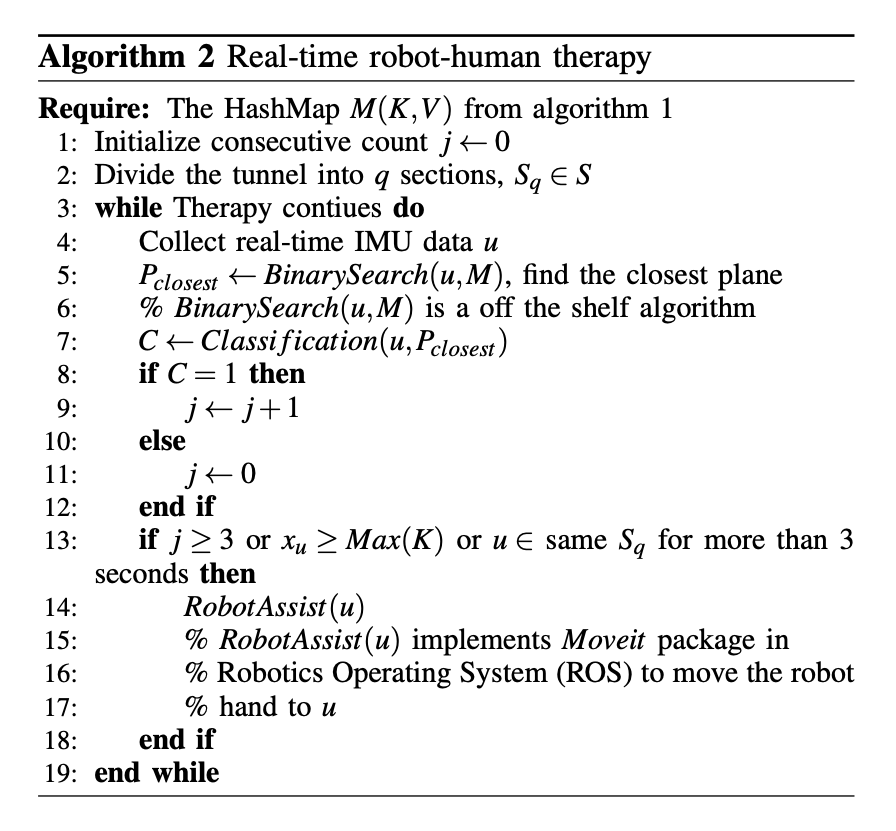

Now we have almost everything. How do we actually tell BAXTER to help? Well, we use ROS (Robot Operating System) as the platform (just like almost all robots…). Two algorithms were written and published in ROS to achieve autonomy in robot-human therapy:

- Train from demonstration

- Real-time robot-human therapy
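To give a feel for the real-time part, here is a rough sketch of a single decision step in plain Python, with all the ROS wiring left out. Everything in it is an assumption made for illustration: the reference path is a list of 2D points, the tolerance is one fixed `radius`, and the "correction" is simply the nearest point on the reference path. The actual strategy is described in the paper; `nearest_point` and `therapy_step` are hypothetical names.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def nearest_point(hand: Point, path: Sequence[Point]) -> Point:
    """Return the reference-path point closest to the hand position."""
    return min(path, key=lambda c: math.hypot(hand[0] - c[0], hand[1] - c[1]))


def therapy_step(hand: Point, path: Sequence[Point], radius: float) -> Tuple[str, Point]:
    """Classify one hand sample and pick a target.

    Returns ("ok", hand) when the hand is within `radius` of the path,
    otherwise ("correct", target) where target is the nearest path point
    (an assumed correction strategy, not necessarily the one we used).
    """
    target = nearest_point(hand, path)
    error = math.hypot(hand[0] - target[0], hand[1] - target[1])
    if error <= radius:
        return ("ok", hand)
    return ("correct", target)


path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
for sample in [(1.0, 0.1), (1.1, 0.5)]:
    print(sample, therapy_step(sample, path, radius=0.2))
```

In a real system this function would be called once per incoming hand sample, and the "correct" result would be turned into a motion command for the robot arm.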

Testing

It is still too dangerous to run the test on a real patient, so the test was done on me :). Guess what? Everything worked like magic!

Here is a trajectory (red and green line) that I made during my therapy with BAXTER’s help.

Paper

If you are interested in our detailed work, you can download our paper:

Towards Data-Driven Autonomous Robot-Assisted Physical Rehabilitation Therapy

Great work, BAXTER!

Oh I really miss my old friends. Hope you are all well in Philly!

Written on March 2nd, 2019 by Ted Wang